Libvirt Serial Console XML

First, ensure your libvirt XML contains a serial console fragment (my guest, installed using virt-install, already had one). Second, edit /boot/grub/grub.conf inside the guest, adding the console=ttyS0 element to the kernel command line: # virt-edit Guestname /boot/grub/grub.conf.

Feb 29, 2012: This is what I normally add to the VM's definition using virsh edit, then console=ttyS0 appended to the VM's kernel line in grub.conf. Never failed me so far.

If you create a VM using virt-install or virt-manager, serial console statements are added to the VM's XML file in any case.
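The XML fragment itself did not survive in the text above. As a minimal sketch, assuming the common pty-backed serial port on port 0 (an assumption, not necessarily the original author's fragment), the two steps could look like this in Python. The helper names `serial_console_devices` and `add_console_arg` are hypothetical and exist only for illustration.

```python
import xml.etree.ElementTree as ET


def serial_console_devices():
    """Build a <devices> fragment with a pty-backed serial port and console."""
    devices = ET.Element("devices")
    serial = ET.SubElement(devices, "serial", {"type": "pty"})
    ET.SubElement(serial, "target", {"port": "0"})
    console = ET.SubElement(devices, "console", {"type": "pty"})
    ET.SubElement(console, "target", {"type": "serial", "port": "0"})
    return devices


def add_console_arg(kernel_line, console="ttyS0"):
    """Append console=<device> to a grub.conf kernel line unless it is already there."""
    arg = "console=" + console
    if arg in kernel_line.split():
        return kernel_line
    return kernel_line.rstrip() + " " + arg


# Print the fragment that would be merged into the domain XML via `virsh edit`.
print(ET.tostring(serial_console_devices(), encoding="unicode"))
```

The generated elements would sit inside the existing `<devices>` element of the domain, and the kernel-line helper mirrors the manual edit of grub.conf inside the guest.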

Prior to release 7.4, Wireless LAN (WLAN) controller software ran on dedicated hardware you were expected to purchase. The Virtual Wireless LAN Controller (vWLC) runs on general hardware under an industry-standard virtualization infrastructure. The vWLC is ideal for small and mid-size deployments that have a virtual infrastructure and require an on-premises controller. Distributed branch environments can also benefit from a centralized virtual controller with fewer branches required (up to 200). vWLCs are not a replacement for shipping hardware controllers. The function and features of the vWLC offer deployment advantages and benefits of controller services where data centers with virtualization infrastructure exist or are considered.

Advantages of the vWLC
• Flexibility in hardware selection based on your requirements.
• Reduced cost, space requirements, and other overheads, since multiple boxes can be replaced with single hardware running multiple instances of virtual appliances.

• AP SSO HA
• N+1 HA (CSCuf38985)

Note When an AP moves from one vWLC to another, it may refuse to join the second vWLC. This occurs when the server hardware fails or a new vWLC instance is created. It is recommended to implement a server mirroring scheme at the VMware level, such as vMotion or some orchestrator. It is highly recommended to retain a snapshot of the VM instance, one from the mobility domain to which access points have joined previously. Then use the snapshot to start the vWLC instance. Access points then join the vWLC. This method can also be used for priming access points instead of a physical controller.

• PMIPv6
• Workgroup Bridges
• Client downstream rate limiting for central switching
• SHA2 certificates

Note vWLC upgrade is supported only between images of the same type (e.g. Small to Small, Large to Large). Mixed upgrades are not supported (e.g. Small to Large, or Large to Small).

For installing NEW virtual wireless controllers on VMware, use *.ova.
• Cisco Wireless LAN Small Scale Virtual Controller Installation with 60 day evaluation license: AIR_CTVM-K9_8_2_100_0.ova
• Cisco Wireless LAN Large Scale Virtual Controller: AIR_CTVM_LARGE-K9_8_2_100_0.ova

For installing NEW virtual wireless controllers on KVM, use *.iso.

• Cisco Wireless LAN Small Scale Virtual Controller Installation with 60 day evaluation license (KVM): MFG_CTVM_8_2_100_0.iso
• Cisco Wireless LAN Large Scale Virtual Controller Installation with 60 day evaluation license (KVM): MFG_CTVM_LARGE_8_2_100_0.iso

The following is a sample configuration of the Cisco Catalyst interfaces connected to the ESXi server, with the virtual switch uplink configured as a trunk interface. The management interface can be connected to an access port on the switch.

    interface GigabitEthernet1/1/2
     description ESXi Management
     switchport access vlan 10
     switchport mode access
    !
    interface GigabitEthernet1/1/3
     description ESXi Trunk
     switchport trunk encapsulation dot1q
     switchport mode trunk
    end

Procedure Step 1 Create two separate virtual switches to map to the virtual controller Service and Data Port. Navigate to ESX >Configuration >Networking and click Add Networking. Step 2 Select Virtual Machine and click Next.

Step 3 Create a vswitch, and assign a physical NIC to connect vWLC service port. The service port does not have to be connected to any part of the network (typically disconnected/unused), therefore any NIC (even disconnected), can be used for this vswitch.

Step 4 Click Next to continue. Step 5 Provide a Label, such as the example below, E.g. 'vWLC Service Port'.

Step 6 Select VLAN ID to be 'None (0)', as typically service port is an access port. Step 7 Click Next to continue. Step 8 In the below screenshot, we see vSwitch1 created for 'vWLC Service Port'. Click on 'Add Networking' to repeat for the Data Port. For the new vSwitch, select the physical NIC(s) connected on a trunk port if there are multiple NICs / portgroup assigned to an etherchannel on the switch.

Step 9 Add the NIC. Step 10 Click Next to continue. Step 11 Provide a label, e.g. 'vWLC Data Port'. Step 12 For VLAN ID, select ALL(4095) since this is connected to a switch trunk port. Step 13 Click Next until completing the steps to add the vswitch.

VMware Promiscuous Mode Definition— Promiscuous mode is a security policy which can be defined at the virtual switch or portgroup level in vSphere ESX/ESXi. A virtual machine, Service Console or VMkernel network interface in a portgroup which allows use of promiscuous mode can see all network traffic traversing the virtual switch. By default, a guest operating system's virtual network adapter only receives frames that are meant for it.

Placing the guest's network adapter in promiscuous mode causes it to receive all frames passed on the virtual switch that are allowed under the VLAN policy for the associated portgroup. This can be useful for intrusion detection monitoring or if a sniffer needs to analyze all traffic on the network segment. The vWLC Data Port requires the assigned vSwitch to accept Promiscuous mode for proper operation.

Step 14 Locate vSwitch2 (assigned for vWLC Data Port), and click Properties. Step 15 Select the VMNet assigned to the vWLC Data Port, note the default Security Promiscuous Mode is set to Reject and click Edit. Step 16 In properties, select Security tab. Step 17 Check the box for Promiscuous Mode and select Accept. Step 18 Confirm the change and click Close to continue.

The virtual controller software is posted as an .ova package in the Cisco software center. Customers can download the .ova package and install it like any other virtual application. The software comes with a free 60-day evaluation license. After the VM is started, the evaluation license can be activated, and later a purchased license can be automatically installed and activated.

Step 19 Download the virtual controller OVA image to the local disk. Step 20 Use vSphere client >Deploy OVF Template to deploy vWLC. Procedure Step 1 Use either SMALL or LARGE *.ova that was downloaded and extracted to local storage.

Step 2 Specify the target OVF file, and click Next. Step 3 The target OVF will display the detail of vWLC being configured, no changes are required, and click Next. Step 4 Provide a name for the vWLC instance that will be created, and click Next.

Step 5 Leave default in the Disk Format, which is Thick Provision Lazy Zeroed, and click Next. Step 6 In the Network Mapping, note that there are 2 source Networks, predefined as Service Port and Data Port (also labeled in the description). Map these interfaces as required in the Destination Networks, and click Next. Note A reminder that the Simplified Controller Provisioning is enabled for new vWLC, using a web browser. A client PC wired connected to the Service Port segment will be able to access this feature upon installing vWLC. Step 7 The vWLC is ready to proceed in the installation, review the Deployment Settings, and click Finish.

Step 8 Once completed, select and power on the vWLC instance. Step 9 Allow the automated installation of vWLC to complete, which may take several minutes. Step 10 On the virtual machine console, the installation will show complete, and a reboot will be initiated.

Step 11 Upon reboot, VMware console will show ' Press any key to use this terminal as the default terminal.' It is important to click into the console window and press ANY key to access the terminal. Step 12 Once the vWLC is fully online, it will present the configuration wizard via CLI. The console port will give access to console prompt of Wireless LAN Controller.

The VM can therefore be provisioned with serial ports to connect to the console. In the absence of serial ports, the vSphere Client console is connected to the console on vWLC. VMware ESXi supports a virtual serial console port that can be added to the vWLC VM. The serial port can be accessed in one of the following two ways:
• Physical Serial Port on the Host: vWLC's virtual serial port is mapped to the hardware serial port on the server. This option is limited to the number of physical serial ports on the host; in a multi-tenant vWLC scenario, this may not be ideal.
• Connect via Network: vWLC's virtual serial port can be accessed using a telnet session from a remote machine to a specific port allocated for the VM on the hypervisor.

For example, if the hypervisor's IP address is 10.10.10.10 and port allocated for a vWLC VM is 9090, using 'telnet 10.10.' , just like accessing a physical WLC's console using a Cisco terminal server, vWLC's serial console can be accessed. Procedure Step 1 In the vWLC Hardware tab, click ' Add'. Step 2 Select Serial Port, and click Next. Step 3 In this scenario, select ' Connect via Network'. Step 4 Select Network Backing >Server (VM listens for connection) Step 5 Port URI: telnet://: e.g. Step 6 Click Next to review options, and click Finish.

Step 7 Click OK to complete the configured settings. To enable for the serial via network, ESX must be configured to allow for such requests. Step 8 Navigate to the ESX >Configuration >Software >Security Profile, and click Properties.

Step 9 In the Firewall Properties >select / check VM serial port connected to vSPC, and click OK to finish with settings. Starting Up the vWLC Step 10 Start the virtual WLC, and select console to observe the first-time installation process.

Step 11 Monitor progress until the VM console shows that the vWLC has restarted (this is automatic). Step 12 At this time, open telnet session to vWLC such as in the example below. Step 13 Telnet session will now manage the console to vWLC. Note Only 1 mode of console can be operational at any time, such as VM console (by key-interrupt at startup), or serial console (physical/network).

It is not possible to maintain both at the same time. Step 14 Continue to wait until the vWLC has fully come online and prompts you to start the configuration wizard. Step 15 Configure the management interface address / mask / gateway. Configure the Management Interface VLAN ID if tagged. Continue with the remainder. Step 16 As with all network devices, it is crucial to configure NTP. The virtual controller must have a correct clock. An incorrect clock can come from the ESX host or from manual configuration, and may prevent access points from joining.

Step 17 Complete the configuration and allow the vWLC to Reset. Step 18 A suggestion is to ping the vWLC management interface to ensure that it has come online. Log into the vWLC. Step 19 You can perform 'show interface summary' and ping the gateway from the vWLC.

Step 20 Connect to vWLC management using a web browser.

An alternative to configuring the vWLC using the CLI through the VMware console is the simplified controller provisioning feature, which applies to both VMware and KVM deployments.

As mentioned earlier in this guide, any wired client PC on the network mapped to the vWLC Service Port can use this feature. The feature is enabled after the first boot of a non-configured vWLC. It temporarily provides DHCP service on the Service Port segment and assigns PC clients a limited network address. The client PC can then connect to the vWLC using a web browser.

Procedure Step 1 With a client PC connected to the vWLC mapped Service Port, it gets an address from a limited range of 192.168.1.3 through 192.168.1.14. The vWLC is assigned a fixed 192.168.1.1.

Step 2 From the client PC, open a browser and connect to the vWLC. The Simplified Setup wizard navigates the admin through the minimal steps required to fully configure the vWLC. The first step is creating the admin account: provide the admin username and password, then click Start. Step 3 In step 1 of the simplified setup wizard, set up the vWLC with a system name, country, date/time (automatically taken from the client PC clock) and NTP server. Also define the management IP address, subnet mask, gateway and VLAN for the management interface. This assignment needs to be configured and available on the Data Port (trunk) from the initial VMware/KVM setup of network interfaces.

Step 4 In step 2, create the wireless network (SSID), security, and network/VLAN assignment as required. Optionally, include a Guest Network setup, a quick and simple step to add secure guest access with a separate network and access method for guests. Step 5 In step 3 of the simplified setup, an admin can optimize the WLC setup for the intended RF use and take advantage of Cisco Wireless LAN Controller best-practice defaults.

Click Next to finalize the setup.

To install Fedora OS, perform the following steps:

Procedure
Step 1 Install Fedora 21 or later. Click the following link to download Fedora.
Step 2 After installing Fedora, configure an IP address to reach the internet. In this scenario, two dedicated Linux interfaces/ports are used for vWLC.
Step 3 Find out your interfaces using ifconfig. Example:
• First interface: for uplink (service port of WLC); no IP address is required on this interface, but it should be connected and up.
• Second interface: for the WLC Management interface; no IP address is required on this interface, but it should be connected and up.
• Third or fourth interface: for Linux accessibility; provide an IP address to this interface so that there is network connectivity to the Linux box.

In the above configuration (network ov-nw):
• portgroup name='all_vlans' allows all VLANs.
• portgroup name='vlan-152-untagged' allows only the untagged VLAN, that is, 152.
• portgroup name='vlan-153' allows only VLAN 153.
• portgroup name='two-vlan' allows only two VLANs, that is, 152 and 153.

Note Console to Fedora. GUI is required for VMM.

Procedure
Step 1 Open the terminal (command prompt).

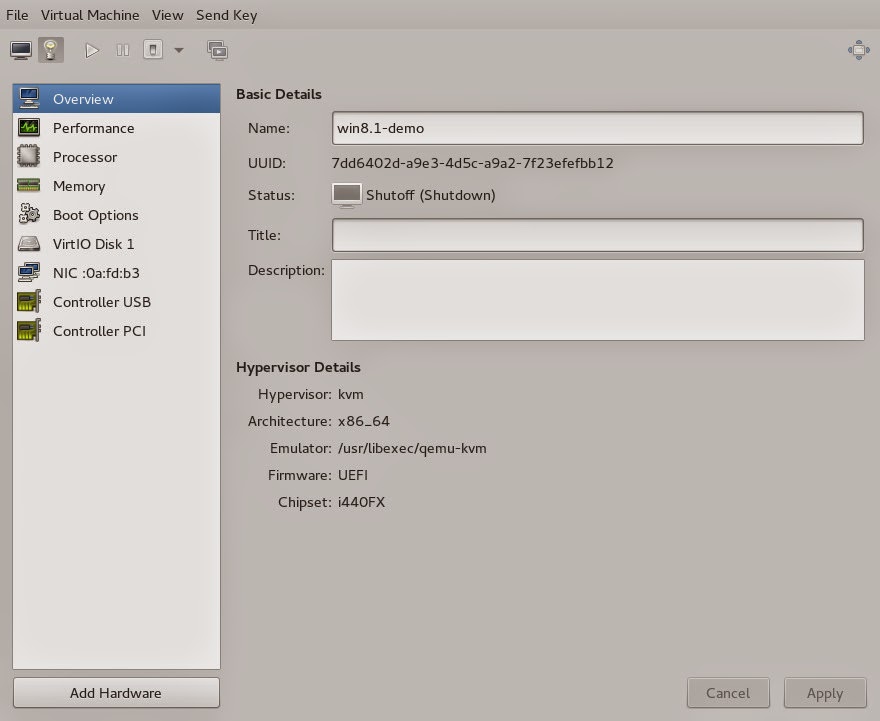

Step 2 Execute the command virt-manager. The Virt Manager(VMM) pop-up window appears. Step 3 Create a new virtual machine (VM). Step 4 Select the path.

Step 5 Select the ISO file of vWLC. Step 6 Select the memory and CPU. Step 7 Select the disk space.

Step 8 Name the VM. Step 9 Check the Customize configuration before install check box and then click Finish. (This lets you configure other options.) Step 10 Click Add Hardware. The Add New Virtual Hardware window appears. This window helps you to configure the service port, management interface, and serial connection:
• Click Network and do the following:
  - From the Network source drop-down list, choose the virtual network. (It is recommended to select the virtual network of the service port of vWLC.)
  - From the Portgroup drop-down list, choose the port group configured in the XML if there are many.
  - From the Device model drop-down list, choose virtio (only this is supported as of now) and then click Finish.
• Repeat by selecting Add Hardware >Network for the virtual network of the management interface.

Note This is similar to installation using Fedora. Procedure Step 1 Launch Virtual Machine Manager (VMM): • Launch VMM from GUI or type virt-manager from shell. The GUI takes you through the following steps to create the vWLC instance easily. • Choose an ISO image. • Choose Memory as 4 GB. • Choose CPU as 1.

• Provide a qcow2 image or raw image. • Click Customize configuration before install. • Click NIC, change device model to virtio, and change host device to VM-Service-Network. • Click Add hardware.

• In the new window, click Network, and change host device to VM-Mgmt-network and device model to virtio. • Click Begin installation. Step 2 From the command prompt, vWLC can be instantiated from shell as well with the following command (modify the filename and path as needed).

Note Before working on any other package or KVM/vswitch, check the Linux kernel. Make sure the kernel version is 3.12.36-38 or above. If the kernel version is not 3.12.36-38 or above, upgrade it by performing the following steps: Procedure Step 1 Install SLES 12 on the server.

Step 2 Once the server is up, copy the kernel rpm to the machine. Step 3 On a terminal, execute rpm -ivh followed by the name of the kernel .rpm file. The rpm is installed and takes some time to configure. Step 4 Reboot the machine once the installation is complete, and verify that the latest kernel is loaded using uname -a.

Right to Use

Enabling additional access points supported by this controller product may require the purchase of supplemental or 'adder' licenses. You may remove supplemental licenses from one controller and transfer to another controller in the same product family.

NOTE: licenses embedded in the controller at time of shipment are not transferable. By clicking 'I AGREE' (or 'I ACCEPT') below, you warrant and represent that you have purchased sufficient supplemental licenses for the access points to be enabled. All supplemental licenses are subject to the terms and conditions of the Cisco end user license agreement (together with any applicable supplemental end user license agreements, or SEULAs). Pursuant to such terms, Cisco is entitled to confirm that your access point enablement is properly licensed.

If you do not agree with any of the above, do not proceed further and CLICK 'DECLINE' below. [y/n]: Y Successfully added the license. Step 3 The AP adder licenses are installed and activated on the vWLC. You can view the installed licenses by typing the show license summary command: (Cisco Controller) >show license summary. Cisco Smart Software Licensing makes it easier to buy, deploy, track, and renew Cisco software by removing today’s entitlement barriers and providing information about your software install base.

This is a major change to Cisco’s software strategy, moving away from a PAK-based model to a new approach that enables flexibility and advanced consumer-based models. With Cisco Smart Software Licensing, you will have: • Visibility into devices and software that you have purchased and deployed • Automatic license activation • Product simplicity with standard software offers, licensing platform, and policies • Possibility of decreased operational costs You, your chosen partners, and Cisco can view your hardware, software entitlements, and eventually services in the Cisco Smart Software Manager interface. All Smart Software Licensed products, upon configuration and activation with a single token, will self-register, removing the need to go to a website and register product after product with PAKs. Instead of using PAKs or license files, Smart Software Licensing establishes a pool of software licenses or entitlements that can be used across your entire portfolio in a flexible and automated manner. Pooling is particularly helpful with RMAs because it eliminates the need to re-host licenses. You may self manage license deployment throughout your company easily and quickly in the Cisco Smart Software Manager. Through standard product offers, a standard license platform, and flexible contracts you will have a simplified, more productive experience with Cisco software.

Procedure Step 1 To activate Smart Licensing on the WLC go to Management >Software Activation >License Type. Step 2 Select Licensing Type as Smart-Licensing from the drop-down menu. Enter the DNS server IP address that will be used to resolve the Smart License and Smart call-home URLs in the call-home profile.

After this step, restart the controller under Commands >Restart. Step 3 Go to Management >Smart-License >Device registration.

Select action as Registration. Register the device by entering the copied token ID.

Step 4 Verify the status of Registration and Authorization under Management >Smart-License >Status. In the CSSM portal the device will show up under the Product Instances tab on the corresponding virtual account that the device was registered with. Step 5 Once APs join the WLC, entitlements are requested once in 24 hours and the status of entitlements can be viewed under Management >Smart-license >Status.

Cisco Prime Infrastructure version 3.0 is the minimum release required to centrally manage one or more Cisco Virtual Controller(s). CPI 3.0 provides configuration, software management, monitoring, reporting and troubleshooting of virtual controllers. Refer to Cisco Prime Infrastructure documentation as required for administrative and management support. Cisco Prime Compatibility Matrix: Procedure Step 1 Log into Cisco Prime Infrastructure server as root.

Step 2 Navigate to Inventory, and Device Management >Network Devices. Step 3 In Network Devices, click Add Device. Step 4 Enter the IP address and SNMP community string (Read/Write). By default, the SNMP RW for the controller is Private, and click Add. Step 5 Cisco Prime Infrastructure will discover and synchronize with the virtual controller, and click on the refresh button to update the screen. When the virtual controller is discovered, it will list as Managed, with good Reachability shown in green. Add any other virtual controller(s) at this point if available.

Step 6 The new controller will be listed under the Device Type Cisco Virtual Series Wireless LAN Controller.

In the early steps of installation, the Cisco Virtual Controller required an OVA file for new virtual appliance creation. However, maintaining virtual controller features and upgrading software requires a common AES file, downloadable from the Cisco site.

Procedure Step 1 Download the upgrade software *.aes file to a target host (e.g. TFTP/FTP) or use HTTP file transfer.

Step 2 Same as for legacy controllers, navigate to the web GUI of the controller, COMMANDS >Download File. Select File Type, Transfer Mode, IP address, path and File Name (aes file).

Click the Download button to start the process. Step 3 When the process has completed successfully, the user is prompted to reboot for the new software image to take effect. Click on the link to the Reboot Page to continue. Step 4 Click Save and Reboot. Step 5 Cisco Prime Infrastructure 3.0 can also be useful for upgrading a virtual controller, or many virtual controllers at the same time. Navigate to Network Device. Select (check box) one or more virtual controller(s) >from the command pull-down select Download (TFTP/TFTP).

This example uses TFTP mode of image upgrade. Step 6 Provide the Download Type (Now / Scheduled) >New or Existing server IP address, path and Server File Name (*.aes upgrade software). Click Download to begin. Step 7 The following screen is an example of the AES image being transferred to the virtual controllers from a TFTP server. Step 8 Cisco Prime Infrastructure will update the status until the software has successfully been transferred. Step 9 Similar to the experience directly from the controller, when the transfer is complete a reboot is required. Navigate in Cisco Prime Infrastructure by selecting the virtual controller(s), and select from command pull-down, Reboot >Reboot Controllers.

Step 10 Cisco Prime Infrastructure will prompt for reboot parameters such as save configuration, etc. Click OK to continue. Step 11 Cisco Prime Infrastructure will notify the admin that the virtual controllers are being rebooted. Step 12 When complete, Cisco Prime Infrastructure will provide the result of the process. Copyright © 2015, Cisco Systems, Inc. All rights reserved.

Domain XML format

This section describes the XML format used to represent domains. There are variations on the format based on the kind of domains run and the options used to launch them. For hypervisor-specific details, consult the driver documentation.

The root element required for all virtual machines is named domain. It has two attributes. The type attribute specifies the hypervisor used for running the domain; the allowed values are driver specific, but include 'xen', 'kvm', 'qemu', 'lxc' and 'kqemu'. The second attribute is id, which is a unique integer identifier for the running guest machine.

Inactive machines have no id value.

Example values: name fv0; uuid 4dea22b31d52d8f32516782e98ab3fa0; title "A short description - title - of the domain"; description "Some human readable description...".

name: The content of the name element provides a short name for the virtual machine. This name should consist only of alpha-numeric characters and is required to be unique within the scope of a single host. It is often used to form the filename for storing the persistent configuration file. Since 0.0.1

uuid: The content of the uuid element provides a globally unique identifier for the virtual machine. The format must be RFC 4122 compliant, eg 3e3fce45-4f53-4fa7-bb8b82b. If omitted when defining/creating a new machine, a random UUID is generated. It is also possible to provide the UUID via a sysinfo specification. Since 0.0.1, sysinfo since 0.8.7

title: The optional element title provides space for a short description of the domain. The title should not contain any newlines. Since 0.9.10

description: The content of the description element provides a human readable description of the virtual machine. This data is not used by libvirt in any way; it can contain any information the user wants. Since 0.7.2

metadata: The metadata node can be used by applications to store custom metadata in the form of XML nodes/trees. Applications must use custom namespaces on their XML nodes/trees, with only one top-level element per namespace (if the application needs structure, they should have sub-elements to their namespace element). Since 0.9.10

There are a number of different ways to boot virtual machines, each with their own pros and cons. Booting via the BIOS is available for hypervisors supporting full virtualization.

In this case the BIOS has a boot order priority (floppy, harddisk, cdrom, network) determining where to obtain/find the boot image. Example values: type hvm; loader /usr/lib/xen/boot/hvmloader; nvram /var/lib/libvirt/nvram/guest_VARS.fd.

type: The content of the type element specifies the type of operating system to be booted in the virtual machine. hvm indicates that the OS is one designed to run on bare metal, so requires full virtualization. linux (badly named!) refers to an OS that supports the Xen 3 hypervisor guest ABI. There are also two optional attributes: arch, specifying the CPU architecture to virtualize, and machine, referring to the machine type. The capabilities XML provides details on allowed values for these. Since 0.0.1

loader: The optional loader tag refers to a firmware blob, which is specified by absolute path, used to assist the domain creation process. It is used by Xen fully virtualized domains as well as for setting the QEMU BIOS file path for QEMU/KVM domains. Xen since 0.1.0, QEMU/KVM since 0.9.12. Since 1.2.8 the element can have two optional attributes: readonly (accepted values are yes and no) reflects whether the image should be writable or read-only. The second attribute, type, accepts the values rom and pflash. It tells the hypervisor where in the guest memory the file should be mapped. For instance, if the loader path points to a UEFI image, type should be pflash. Moreover, some firmwares may implement the Secure boot feature. The secure attribute can then be used to control it. Since 2.1.0

nvram: Some UEFI firmwares may want to use non-volatile memory to store some variables. In the host, this is represented as a file, and the absolute path to the file is stored in this element. Moreover, when the domain is started up, libvirt copies the so-called master NVRAM store file defined in qemu.conf. If needed, the template attribute can be used to override, per domain, the map of master NVRAM stores from the config file. Note that for transient domains, if the NVRAM file has been created by libvirt it is left behind, and it is the management application's responsibility to save and remove the file (if it needs to be persistent). Since 1.2.8

boot: The dev attribute takes one of the values 'fd', 'hd', 'cdrom' or 'network' and is used to specify the next boot device to consider. The boot element can be repeated multiple times to set up a priority list of boot devices to try in turn.

Multiple devices of the same type are sorted according to their targets while preserving the order of buses. After defining the domain, its XML configuration returned by libvirt (through virDomainGetXMLDesc) lists devices in the sorted order. Once sorted, the first device is marked as bootable. Thus, e.g., a domain configured to boot from 'hd' with vdb, hda, vda, and hdc disks assigned to it will boot from vda (the sorted list is vda, vdb, hda, hdc). A similar domain with hdc, vda, vdb, and hda disks will boot from hda (sorted disks are: hda, hdc, vda, vdb). It can be tricky to configure in the desired way, which is why per-device boot elements (see the disks, network interfaces, and USB and PCI devices sections below) were introduced; they are the preferred way, providing full control over booting order. The boot element and per-device boot elements are mutually exclusive.
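The ordering rule is easier to follow when worked through in code. The following Python sketch reproduces the two examples from the text. It is only an illustration of the described rule, not libvirt's implementation, and it assumes the bus is identified by the target name without its final letter (for example "vd" for "vda"). The function name `sort_boot_disks` is hypothetical.

```python
def sort_boot_disks(targets):
    """Sort disk targets within each bus while keeping buses in first-seen order."""
    bus_order = []  # buses in the order they first appear
    by_bus = {}     # bus prefix -> list of targets on that bus
    for target in targets:
        bus = target[:-1]  # assumption: "vda" -> bus "vd", "hdc" -> bus "hd"
        if bus not in by_bus:
            by_bus[bus] = []
            bus_order.append(bus)
        by_bus[bus].append(target)
    return [t for bus in bus_order for t in sorted(by_bus[bus])]


# First example from the text: the domain boots from vda.
print(sort_boot_disks(["vdb", "hda", "vda", "hdc"]))
# Second example from the text: the domain boots from hda.
print(sort_boot_disks(["hdc", "vda", "vdb", "hda"]))
```

In both cases the first element of the returned list is the disk that would be marked as bootable.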

Since 0.1.3, per-device boot since 0.8.8

smbios: How to populate SMBIOS information visible in the guest. The mode attribute must be specified, and is either 'emulate' (let the hypervisor generate all values), 'host' (copy all of Block 0 and Block 1, except for the UUID, from the host's SMBIOS values; the virConnectGetSysinfo call can be used to see what values are copied), or 'sysinfo' (use the values in the sysinfo element). If not specified, the hypervisor default is used. Since 0.8.7

Up to this point, the BIOS/UEFI configuration knobs are generic enough to be implemented by the majority of (if not all) firmwares. From here on, however, not every setting makes sense for every firmware. For instance, rebootTimeout doesn't make sense for UEFI, and useserial might not be usable with a BIOS firmware that doesn't produce any output on the serial line. Moreover, firmwares don't usually export their capabilities for libvirt (or users) to check, and the set of their capabilities can change with every new release. Hence users are advised to try the settings they use before relying on them in production.

bootmenu: Whether or not to enable an interactive boot menu prompt on guest startup. The enable attribute can be either 'yes' or 'no'. If not specified, the hypervisor default is used. Since 0.8.3. The additional attribute timeout takes the number of milliseconds the boot menu should wait until it times out. Allowed values are numbers in the range [0, 65535] inclusive, and it is ignored unless enable is set to 'yes'. Since 1.2.8

bios: This element has the attribute useserial, with possible values yes or no. It enables or disables the Serial Graphics Adapter, which allows users to see BIOS messages on a serial port. Therefore, one needs to have a serial port defined.
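As a hedged illustration of the bootmenu and bios settings just described, the sketch below builds the two elements and enforces the documented timeout range before emitting XML. The helper name `bios_boot_options` is hypothetical, and whether a given firmware honours these settings still has to be tested, as noted above.

```python
import xml.etree.ElementTree as ET


def bios_boot_options(menu_timeout_ms, use_serial=True):
    """Return an <os> fragment with <bootmenu> and <bios> configured."""
    # The text gives [0, 65535] inclusive as the allowed timeout range.
    if not 0 <= menu_timeout_ms <= 65535:
        raise ValueError("bootmenu timeout must be within 0..65535 milliseconds")
    os_el = ET.Element("os")
    ET.SubElement(
        os_el, "bootmenu", {"enable": "yes", "timeout": str(menu_timeout_ms)}
    )
    ET.SubElement(os_el, "bios", {"useserial": "yes" if use_serial else "no"})
    return os_el


# A 3-second boot menu with BIOS output mirrored to the serial port.
print(ET.tostring(bios_boot_options(3000), encoding="unicode"))
```

Setting useserial to yes is only useful when the domain also defines a serial device.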

Since 0.10.2 (QEMU only) there is another attribute, rebootTimeout, that controls whether and after how long the guest should start booting again in case the boot fails (according to the BIOS). The value is in milliseconds with a maximum of 65535, and the special value -1 disables the reboot.

Hypervisors employing paravirtualization do not usually emulate a BIOS; instead, the host is responsible for kicking off the operating system boot. This may use a pseudo-bootloader in the host to provide an interface to choose a kernel for the guest. An example is pygrub with Xen. The Bhyve hypervisor also uses a host bootloader, either bhyveload or grub-bhyve.

Example values: bootloader /usr/bin/pygrub; bootloader_args --append single.

bootloader: The content of the bootloader element provides a fully qualified path to the bootloader executable in the host OS. This bootloader will be run to choose which kernel to boot. The required output of the bootloader is dependent on the hypervisor in use. Since 0.1.0

bootloader_args: The optional bootloader_args element allows command line arguments to be passed to the bootloader.

Since 0.2.3 When installing a new guest OS it is often useful to boot directly from a kernel and initrd stored in the host OS, allowing command line arguments to be passed directly to the installer. This capability is usually available for both para and full virtualized guests. Hvm /usr/lib/xen/boot/hvmloader /root/f8-i386-vmlinuz /root/f8-i386-initrd console=ttyS0 ks=/root/ppc.dtb /path/to/slic.dat. Type This element has the same semantics as described earlier in the loader This element has the same semantics as described earlier in the kernel The contents of this element specify the fully-qualified path to the kernel image in the host OS. Initrd The contents of this element specify the fully-qualified path to the (optional) ramdisk image in the host OS. Cmdline The contents of this element specify arguments to be passed to the kernel (or installer) at boot time. This is often used to specify an alternate primary console (eg serial port), or the installation media source / kickstart file dtb The contents of this element specify the fully-qualified path to the (optional) device tree binary (dtb) image in the host OS.
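The direct kernel boot elements described above tie back to the serial console setup: the console=ttyS0 argument travels in cmdline. The following is a minimal sketch in Python that parses a hypothetical os fragment with the standard library and extracts the console= argument. The kernel and initrd paths, the root= argument, and the helper name console_device are assumptions made for illustration, not values from any real guest.

```python
import xml.etree.ElementTree as ET

# Hypothetical <os> fragment for direct kernel boot; all paths are placeholders.
OS_XML = """
<os>
  <type>hvm</type>
  <kernel>/var/lib/libvirt/boot/vmlinuz</kernel>
  <initrd>/var/lib/libvirt/boot/initrd.img</initrd>
  <cmdline>console=ttyS0 root=/dev/vda1</cmdline>
</os>
"""


def console_device(os_xml):
    """Return the value of the first console= kernel argument, or None."""
    cmdline = ET.fromstring(os_xml).findtext("cmdline", default="")
    for arg in cmdline.split():
        if arg.startswith("console="):
            return arg[len("console="):]
    return None


print(console_device(OS_XML))  # ttyS0
```

A check like this can be handy before starting a guest whose only console is the serial port, since a missing console= argument usually shows up as a silent console rather than an error.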

The dtb element is available since 1.0.4.

acpi: The table element contains a fully-qualified path to the ACPI table. The type attribute contains the ACPI table type (currently only slic is supported). Since 1.3.5 (QEMU only).

When booting a domain using container-based virtualization, a path to the init binary is required instead of a kernel or boot image, using the init element. By default this will be launched with no arguments. To specify the initial argv, use the initarg element, repeated as many times as required. The cmdline element, if set, will be used to provide an equivalent to /proc/cmdline but will not affect init argv. To set environment variables, use the initenv element, one for each variable. To set a custom work directory for the init, use the initdir element. To run the init command as a given user or group, use the inituser or initgroup elements respectively. Both elements can be given either a user (resp. group) ID or a name. Prefixing the user or group ID with a + forces it to be treated as a numeric value; without the prefix, it is first tried as a user or group name.

Example values: type exe; init /bin/systemd; initarg --unit and emergency.service; initenv some value; initdir /my/custom/cwd; inituser tester; initgroup 1000.

If you want to enable user namespace, set the idmap element.

The uid and gid elements have three attributes:

• start: First user ID in the container. It must be '0'.
• target: The first user ID in the container will be mapped to this target user ID in the host.
• count: How many users in the container are allowed to map to the host's users.

Some hypervisors allow control over what system information is presented to the guest (for example, SMBIOS fields can be populated by a hypervisor and inspected via the dmidecode command in the guest). The optional sysinfo element covers all such categories of information. Since 0.8.7.

Example values: bios vendor LENOVO; system manufacturer Fedora, product Virt-Manager, version 0.9.4; baseBoard manufacturer LENOVO, product 20BE0061MC, version 0B98401 Pro, serial W1KS427111E.

The sysinfo element has a mandatory attribute type that determines the layout of sub-elements, with supported values of:

smbios: Sub-elements call out specific SMBIOS values, which will affect the guest if used in conjunction with the smbios sub-element of the os element.

Each sub-element of sysinfo names a SMBIOS block, and within those elements can be a list of entry elements that describe a field within the block. The following blocks and entries are recognized:

bios: This is block 0 of SMBIOS, with entry names drawn from:

• vendor: BIOS vendor's name
• version: BIOS version
• date: BIOS release date. If supplied, it is in either mm/dd/yy or mm/dd/yyyy format. If the year portion of the string is two digits, the year is assumed to be 19yy.
• release: System BIOS major and minor release number values concatenated together as one string separated by a period, for example, 10.22.

system: This is block 1 of SMBIOS, with entry names drawn from:

• manufacturer: Manufacturer of the system
• product: Product name
• version: Version of the product
• serial: Serial number
• uuid: Universal Unique ID number. If this entry is provided alongside a top-level uuid element, then the two values must match.
• sku: SKU number to identify a particular configuration.
• family: Identifies the family a particular computer belongs to.

baseBoard: This is block 2 of SMBIOS. This element can be repeated multiple times to describe all the base boards; however, not all hypervisors necessarily support the repetition.

The baseBoard element can have the following children:

• manufacturer: Manufacturer of the base board
• product: Product name
• version: Version of the product
• serial: Serial number
• asset: Asset tag
• location: Location in chassis

NB: Incorrectly supplied entries for the bios, system or baseBoard blocks will be ignored without error. Other than uuid validation and date format checking, all values are passed as strings to the hypervisor driver.

vcpu: The content of this element defines the maximum number of virtual CPUs allocated for the guest OS, which must be between 1 and the maximum supported by the hypervisor.

cpuset: The optional attribute cpuset is a comma-separated list of physical CPU numbers that the domain process and virtual CPUs can be pinned to by default. (NB: The pinning policy of the domain process and virtual CPUs can be specified separately by cputune. If the attribute emulatorpin of cputune is specified, the cpuset specified by vcpu here will be ignored. Similarly, for virtual CPUs which have vcpupin specified, the cpuset specified here will be ignored. Virtual CPUs which don't have vcpupin specified will each be pinned to the physical CPUs specified by cpuset here.) Each element in that list is either a single CPU number, a range of CPU numbers, or a caret followed by a CPU number to be excluded from a previous range. Since 0.4.4.

current: The optional attribute current can be used to specify whether fewer than the maximum number of virtual CPUs should be enabled. Since 0.8.5.

placement: The optional attribute placement can be used to indicate the CPU placement mode for the domain process. The value can be either 'static' or 'auto', but defaults to the placement of numatune, or to 'static' if cpuset is specified. Using 'auto' indicates the domain process will be pinned to the advisory nodeset from querying numad, and the value of attribute cpuset will be ignored if it is specified. If neither cpuset nor placement is specified, or if placement is 'static' but no cpuset is specified, the domain process will be pinned to all the available physical CPUs. Since 0.9.11 (QEMU and KVM only).

vcpus: The vcpus element allows control over the state of individual vCPUs. The id attribute specifies the vCPU id as used by libvirt in other places such as vCPU pinning, scheduler information and NUMA assignment. Note that the vCPU ID as seen in the guest may differ from the libvirt ID in certain cases. Valid IDs are from 0 to the maximum vCPU count as set by the vcpu element minus 1. The enabled attribute controls the state of the vCPU.

Valid values for the enabled attribute are yes and no.

The hotpluggable attribute controls whether the given vCPU can be hotplugged and hotunplugged in cases when the CPU is enabled at boot. Note that all disabled vCPUs must be hotpluggable. Valid values are yes and no.

The order attribute specifies the order in which to add the online vCPUs. For hypervisors/platforms that require inserting multiple vCPUs at once, the order may be duplicated across all vCPUs that need to be enabled at once. Specifying order is not necessary; vCPUs are then added in an arbitrary order. If order info is used, it must be used for all online vCPUs. Hypervisors may clear or update ordering information during certain operations to assure a valid configuration.

Note that hypervisors may create hotpluggable vCPUs differently from boot vCPUs, thus special initialization may be necessary. Hypervisors may require that vCPUs enabled on boot which are not hotpluggable are clustered at the beginning, starting with ID 0. It may also be required that vCPU 0 is always present and non-hotpluggable. Note that providing state for individual CPUs may be necessary to enable support of addressable vCPU hotplug, and this feature may not be supported by all hypervisors.

For QEMU the following conditions are required: vCPU 0 needs to be enabled and non-hotpluggable. On PPC64, the vCPUs that are in the same core need to be enabled along with it. All non-hotpluggable CPUs present at boot need to be grouped after vCPU 0. Since 2.2.0 (QEMU only).

IOThreads are dedicated event loop threads for supported disk devices to perform block I/O requests in order to improve scalability, especially on an SMP host/guest with many LUNs. Since 1.2.8 (QEMU only).

iothreads: The content of this optional element defines the number of IOThreads to be assigned to the domain for use by supported target storage devices. There should be only 1 or 2 IOThreads per host CPU. There may be more than one supported device assigned to each IOThread. Since 1.2.8.

iothreadids: The optional iothreadids element provides the capability to specifically define the IOThread IDs for the domain. By default, IOThread IDs are sequentially numbered starting from 1 through the number of iothreads defined for the domain. The id attribute is used to define the IOThread ID and must be a positive integer greater than 0. If there are fewer iothreadids defined than iothreads defined for the domain, then libvirt will sequentially fill iothreadids starting at 1, avoiding any predefined id. If there are more iothreadids defined than iothreads defined for the domain, then the iothreads value will be adjusted accordingly. Since 1.2.15.

cputune: The optional cputune element provides details regarding the CPU tunable parameters for the domain. Since 0.9.0.

vcpupin: The optional vcpupin element specifies which of the host's physical CPUs the domain vCPU will be pinned to.

If this is omitted, and the attribute cpuset of element vcpu is not specified, the vCPU is pinned to all the physical CPUs by default. It contains two required attributes: the attribute vcpu specifies the vCPU id, and the attribute cpuset is the same as the attribute cpuset of element vcpu.
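The cpuset syntax shared by vcpu and vcpupin (single CPU numbers, ranges, and a caret to exclude a CPU from a previous range) can be sketched as a small parser. This is an illustrative Python sketch, not libvirt's own parser; the function name parse_cpuset is hypothetical, and it assumes items are applied left to right so that a caret only removes CPUs added earlier in the list.

```python
def parse_cpuset(spec):
    """Expand a cpuset string such as '1-4,^3,6' into a sorted list of CPU numbers."""
    cpus = set()
    for item in spec.split(","):
        item = item.strip()
        if not item:
            continue
        if item.startswith("^"):
            # Caret: exclude one CPU that an earlier item added.
            cpus.discard(int(item[1:]))
        elif "-" in item:
            # Inclusive range of CPU numbers.
            first, last = item.split("-", 1)
            cpus.update(range(int(first), int(last) + 1))
        else:
            # Single CPU number.
            cpus.add(int(item))
    return sorted(cpus)


print(parse_cpuset("1-4,^3,6"))  # [1, 2, 4, 6]
```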

(NB: vcpupin is supported only by the QEMU driver.) Since 0.9.0.

emulatorpin: The optional emulatorpin element specifies which of the host's physical CPUs the 'emulator', a subset of a domain not including vCPU or IOThread threads, will be pinned to. If this is omitted, and the attribute cpuset of element vcpu is not specified, the 'emulator' is pinned to all the physical CPUs by default. It contains one required attribute, cpuset, specifying which physical CPUs to pin to.

iothreadpin: The optional iothreadpin element specifies which of the host's physical CPUs the IOThreads will be pinned to. If this is omitted, and the attribute cpuset of element vcpu is not specified, the IOThreads are pinned to all the physical CPUs by default. There are two required attributes: the attribute iothread specifies the IOThread ID, and the attribute cpuset specifies which physical CPUs to pin to. See iothreadids for valid iothread values. Since 1.2.9.

shares: The optional shares element specifies the proportional weighted share for the domain. If this is omitted, it defaults to the OS provided defaults. NB: there is no unit for the value; it is a relative measure based on the settings of other VMs, e.g. a VM configured with value 2048 will get twice as much CPU time as a VM configured with value 1024.
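The relative nature of shares can be made concrete with a little arithmetic. The sketch below, in Python, assumes that all listed VMs are fully CPU-bound and compete for the same host CPUs, which is the only situation where the weights translate directly into fractions of CPU time; the VM names and the function name cpu_time_fractions are hypothetical.

```python
def cpu_time_fractions(shares):
    """Map each VM name to its fraction of CPU time under full contention."""
    total = sum(shares.values())
    return {name: value / total for name, value in shares.items()}


fractions = cpu_time_fractions({"vm-a": 2048, "vm-b": 1024})
# vm-a gets two thirds of the contended CPU time, vm-b one third.
print(fractions)
```

When a VM is idle, the others can use its unused time, so these fractions are a floor under contention rather than a cap.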

(The shares element is available since 0.9.0.)

period: The optional period element specifies the enforcement interval (unit: microseconds). Within period, each vCPU of the domain will not be allowed to consume more than quota worth of runtime. The value should be in the range [1000, 1000000]. A period with value 0 means no value. Supported only by the QEMU driver (since 0.9.4) and LXC (since 0.9.10).

quota: The optional quota element specifies the maximum allowed bandwidth (unit: microseconds). A domain with quota as any negative value indicates that the domain has infinite bandwidth, which means that it is not bandwidth controlled. The value should be in the range [1000, 18446744073709551] or less than 0. A quota with value 0 means no value. You can use this feature to ensure that all vCPUs run at the same speed. Supported only by the QEMU driver (since 0.9.4) and LXC (since 0.9.10).

emulator_period: The optional emulator_period element specifies the enforcement interval (unit: microseconds). Within emulator_period, emulator threads (those excluding vCPUs) of the domain will not be allowed to consume more than emulator_quota worth of runtime. The value should be in the range [1000, 1000000]. A period with value 0 means no value. Supported only by the QEMU driver, since 0.10.0.

emulator_quota: The optional emulator_quota element specifies the maximum allowed bandwidth (unit: microseconds) for the domain's emulator threads (those excluding vCPUs). A domain with emulator_quota as any negative value indicates that the domain has infinite bandwidth for emulator threads, which means that it is not bandwidth controlled. The value should be in the range [1000, 18446744073709551] or less than 0. A quota with value 0 means no value. Supported only by the QEMU driver, since 0.10.0.

iothread_period: The optional iothread_period element specifies the enforcement interval (unit: microseconds) for IOThreads. Within iothread_period, each IOThread of the domain will not be allowed to consume more than iothread_quota worth of runtime. The value should be in the range [1000, 1000000]. An iothread_period with value 0 means no value. Supported only by the QEMU driver, since 2.1.0.

iothread_quota: The optional iothread_quota element specifies the maximum allowed bandwidth (unit: microseconds) for IOThreads. A domain with iothread_quota as any negative value indicates that the domain IOThreads have infinite bandwidth, which means that it is not bandwidth controlled. The value should be in the range [1000, 18446744073709551] or less than 0. An iothread_quota with value 0 means no value. You can use this feature to ensure that all IOThreads run at the same speed.
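The period and quota pairs above all work the same way: within each period, a thread may run for at most quota microseconds. A minimal Python sketch of that relationship follows; the function name cpu_cap is hypothetical, and treating 0 ("no value") the same as "not capped by this setting" is an assumption of the sketch.

```python
def cpu_cap(period_us, quota_us):
    """Return the fraction of one host CPU a thread may use, or None if uncapped.

    Both arguments are in microseconds, as in the period/quota elements.
    """
    if period_us == 0 or quota_us == 0:
        return None  # 0 means "no value": nothing is set here
    if quota_us < 0:
        return None  # negative quota: infinite bandwidth
    if not 1000 <= period_us <= 1000000:
        raise ValueError("period must be in [1000, 1000000] microseconds")
    if quota_us < 1000:
        raise ValueError("quota must be at least 1000 microseconds or negative")
    return quota_us / period_us


print(cpu_cap(100000, 50000))  # 0.5
print(cpu_cap(100000, -1))     # None
```

With a period of 100000 and a quota of 50000, each vCPU thread is limited to half of one host CPU.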

(iothread_quota is supported only by the QEMU driver, since 2.1.0.)

vcpusched and iothreadsched: The optional vcpusched and iothreadsched elements specify the scheduler type (values batch, idle, fifo, rr) for particular vCPU/IOThread threads (based on the vcpus and iothreads attributes; leaving out vcpus/iothreads sets the default). Valid vcpus values start at 0 through one less than the number of vCPUs defined for the domain. Valid iothreads values are described in iothreadids. If no iothreadids are defined, then libvirt numbers IOThreads from 1 to the number of iothreads available for the domain. For real-time schedulers (fifo, rr), priority must be specified as well (and is ignored for non-real-time ones). The value range for the priority depends on the host kernel (usually 1-99). Since 1.2.13.

memory: The maximum allocation of memory for the guest at boot time. The memory allocation includes possible additional memory devices specified at start or hotplugged later. The units for this value are determined by the optional attribute unit, which defaults to 'KiB' (kibibytes, 2^10 or blocks of 1024 bytes). Valid units are 'b' or 'bytes' for bytes, 'KB' for kilobytes (10^3 or 1,000 bytes), 'k' or 'KiB' for kibibytes (1024 bytes), 'MB' for megabytes (10^6 or 1,000,000 bytes), 'M' or 'MiB' for mebibytes (2^20 or 1,048,576 bytes), 'GB' for gigabytes (10^9 or 1,000,000,000 bytes), 'G' or 'GiB' for gibibytes (2^30 or 1,073,741,824 bytes), 'TB' for terabytes (10^12 or 1,000,000,000,000 bytes), or 'T' or 'TiB' for tebibytes (2^40 or 1,099,511,627,776 bytes). However, the value will be rounded up to the nearest kibibyte by libvirt, and may be further rounded to the granularity supported by the hypervisor.
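The unit table and the round-up rule can be sketched in a few lines of Python. The unit names and multipliers come from the list above; the function name to_kib is hypothetical, and the sketch only models the first rounding step (up to the nearest kibibyte), not any further hypervisor-specific rounding.

```python
# Bytes per unit, as listed for the unit attribute.
UNIT_BYTES = {
    "b": 1, "bytes": 1,
    "KB": 10**3, "k": 2**10, "KiB": 2**10,
    "MB": 10**6, "M": 2**20, "MiB": 2**20,
    "GB": 10**9, "G": 2**30, "GiB": 2**30,
    "TB": 10**12, "T": 2**40, "TiB": 2**40,
}


def to_kib(value, unit="KiB"):
    """Convert a memory value to KiB, rounding up to the nearest kibibyte."""
    total_bytes = value * UNIT_BYTES[unit]
    return -(-total_bytes // 1024)  # ceiling division on integers


print(to_kib(1, "GiB"))  # 1048576
print(to_kib(1, "GB"))   # 976563
```

The second call shows why the distinction between 'GB' and 'GiB' matters: one decimal gigabyte is about 7% smaller than one gibibyte.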

Some hypervisors also enforce a minimum, such as 4000KiB. In case NUMA is configured for the guest, the memory element can be omitted. In the case of a crash, the optional attribute dumpCore can be used to control whether the guest memory should be included in the generated coredump or not (values 'on', 'off'). unit since 0.9.11, dumpCore since 0.10.2 (QEMU only).

maxMemory: The run time maximum memory allocation of the guest. The initial memory specified by either the memory element or the NUMA cell size configuration can be increased by hot-plugging of memory to the limit specified by this element. The unit attribute behaves the same as for memory. The slots attribute specifies the number of slots available for adding memory to the guest. The bounds are hypervisor specific. Note that due to alignment of the memory chunks added via memory hotplug, the full size allocation specified by this element may be impossible to achieve. Since 1.2.14, supported by the QEMU driver.

currentMemory: The actual allocation of memory for the guest. This value can be less than the maximum allocation, to allow for ballooning up the guest's memory on the fly. If this is omitted, it defaults to the same value as the memory element. The unit attribute behaves the same as for memory.

The optional memoryBacking element may contain several elements that influence how virtual memory pages are backed by host pages.

hugepages: This tells the hypervisor that the guest should have its memory allocated using hugepages instead of the normal native page size. Since 1.2.5 it is possible to set hugepages more specifically per NUMA node. The page element is introduced. It has one compulsory attribute size which specifies which hugepages should be used (especially useful on systems supporting hugepages of different sizes). The default unit for the size attribute is kilobytes (multiplier of 1024). If you want to use a different unit, use the optional unit attribute. For systems with NUMA, the optional nodeset attribute may come in handy as it ties given guest's NUMA nodes to certain hugepage sizes. From the example snippet, one gigabyte hugepages are used for every NUMA node except node number four. For the correct syntax see the nodeset attribute of numatune.
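A memoryBacking fragment consistent with that description might look like the one embedded in the Python sketch below, which parses it with the standard library and lists the page settings. The exact node numbers (0-3,5 for the 1 GiB pages) and the 2 MiB page size for node 4 are assumptions chosen to match the sentence above; the function name hugepage_settings is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment: 1 GiB pages everywhere except guest NUMA node 4.
MEMORY_BACKING_XML = """
<memoryBacking>
  <hugepages>
    <page size="1" unit="G" nodeset="0-3,5"/>
    <page size="2" unit="M" nodeset="4"/>
  </hugepages>
</memoryBacking>
"""


def hugepage_settings(xml_text):
    """Return (size, unit, nodeset) for every page element, in document order."""
    root = ET.fromstring(xml_text)
    return [
        (page.get("size"), page.get("unit"), page.get("nodeset"))
        for page in root.findall("./hugepages/page")
    ]


print(hugepage_settings(MEMORY_BACKING_XML))
# [('1', 'G', '0-3,5'), ('2', 'M', '4')]
```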

nosharepages: Instructs the hypervisor to disable shared pages (memory merge, KSM) for this domain. Since 1.0.6.

locked: When set and supported by the hypervisor, memory pages belonging to the domain will be locked in the host's memory and the host will not be allowed to swap them out, which might be required for some workloads such as real-time. For QEMU/KVM guests, the memory used by the QEMU process itself will be locked too: unlike guest memory, this is an amount libvirt has no way of figuring out in advance, so it has to remove the limit on locked memory altogether. Thus, enabling this option opens up a potential security risk: the host will be unable to reclaim the locked memory from the guest when it is running out of memory, which means a malicious guest allocating large amounts of locked memory could cause a denial-of-service attack on the host. Because of this, using this option is discouraged unless your workload demands it; even then, it is highly recommended to set a hard_limit (see memtune) on memory allocation suitable for the specific environment at the same time to mitigate the risks described above. Since 1.0.6.

source: In this attribute you can switch to file memory backing or keep the default anonymous.

access: Specify if memory is shared or private. This can be overridden per NUMA node by memAccess.

allocation: Specify when to allocate the memory.

Example memtune values, with units set through each element's unit attribute: hard_limit 1; soft_limit 128; swap_hard_limit 2; min_guarantee 67108864.

memtune: The optional memtune element provides details regarding the memory tunable parameters for the domain. If this is omitted, it defaults to the OS provided defaults.

For QEMU/KVM, the parameters are applied to the QEMU process as a whole. Thus, when counting them, one needs to add up guest RAM, guest video RAM, and some memory overhead of QEMU itself. The last piece is hard to determine, so one needs to guess and try. For each tunable, it is possible to designate which unit the number is in on input, using the same values as for memory. For backwards compatibility, output is always in KiB. unit since 0.9.11. Possible values for all *_limit parameters are in the range from 0 to VIR_DOMAIN_MEMORY_PARAM_UNLIMITED.

hard_limit: The optional hard_limit element is the maximum memory the guest can use. The units for this value are kibibytes (i.e. blocks of 1024 bytes). Users of QEMU and KVM are strongly advised not to set this limit, as the domain may get killed by the kernel if the guess is too low, and determining the memory needed for a process to run is an undecidable problem; that said, if you already set locked in memoryBacking because your workload demands it, you'll have to take into account the specifics of your deployment and figure out a value for hard_limit that balances the risk of your guest being killed because the limit was set too low and the risk of your host crashing because it cannot reclaim the memory used by the guest due to locked.

soft_limit: The optional soft_limit element is the memory limit to enforce during memory contention. The units for this value are kibibytes (i.e. blocks of 1024 bytes).

swap_hard_limit: The optional swap_hard_limit element is the maximum memory plus swap the guest can use. The units for this value are kibibytes (i.e. blocks of 1024 bytes). This has to be more than the hard_limit value provided.

min_guarantee: The optional min_guarantee element is the guaranteed minimum memory allocation for the guest. The units for this value are kibibytes (i.e. blocks of 1024 bytes). This element is only supported by the VMware ESX and OpenVZ drivers.

numatune: The optional numatune element provides details of how to tune the performance of a NUMA host via controlling NUMA policy for the domain process. NB, only supported by the QEMU driver. Since 0.9.3.

memory: The optional memory element specifies how to allocate memory for the domain process on a NUMA host. It contains several optional attributes. Attribute mode is either 'interleave', 'strict', or 'preferred', and defaults to 'strict'. Attribute nodeset specifies the NUMA nodes, using the same syntax as attribute cpuset of element vcpu. Attribute placement (since 0.9.12) can be used to indicate the memory placement mode for the domain process; its value can be either 'static' or 'auto', and defaults to the placement of vcpu, or to 'static' if nodeset is specified. 'auto' indicates the domain process will only allocate memory from the advisory nodeset returned from querying numad, and the value of attribute nodeset will be ignored if it is specified. If placement of vcpu is 'auto', and numatune is not specified, a default numatune with placement 'auto' and mode 'strict' will be added implicitly. Since 0.9.3.

memnode: Optional memnode elements can specify memory allocation policies for each guest NUMA node. For those nodes having no corresponding memnode element, the default from element memory will be used. Attribute cellid addresses the guest NUMA node for which the settings are applied. Attributes mode and nodeset have the same meaning and syntax as in the memory element. This setting is not compatible with automatic placement. QEMU, since 1.2.7.
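The fallback from memnode to the memory default can be sketched as a lookup. The Python below parses a hypothetical numatune fragment and resolves the policy for a given guest NUMA cell; the node numbers in the fragment and the function name policy_for_cell are assumptions for illustration only.

```python
import xml.etree.ElementTree as ET

# Hypothetical numatune fragment with per-cell overrides for cells 0 and 2.
NUMATUNE_XML = """
<numatune>
  <memory mode="strict" nodeset="1-4,^3"/>
  <memnode cellid="0" mode="strict" nodeset="1"/>
  <memnode cellid="2" mode="preferred" nodeset="2"/>
</numatune>
"""


def policy_for_cell(xml_text, cellid):
    """Return (mode, nodeset) for a guest NUMA cell, using memory as the default."""
    root = ET.fromstring(xml_text)
    for node in root.findall("memnode"):
        if node.get("cellid") == str(cellid):
            return node.get("mode"), node.get("nodeset")
    memory = root.find("memory")
    if memory is None:
        return "strict", None  # mode defaults to 'strict'; no nodeset given
    return memory.get("mode", "strict"), memory.get("nodeset")


print(policy_for_cell(NUMATUNE_XML, 0))  # ('strict', '1')
print(policy_for_cell(NUMATUNE_XML, 1))  # ('strict', '1-4,^3')
print(policy_for_cell(NUMATUNE_XML, 2))  # ('preferred', '2')
```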

Example values: weight 800; device /dev/sda with weight 1000; device /dev/sdb with weight 500.

blkiotune: The optional blkiotune element provides the ability to tune Blkio cgroup tunable parameters for the domain. If this is omitted, it defaults to the OS provided defaults. Since 0.8.8.

weight: The optional weight element is the overall I/O weight of the guest. The value should be in the range [100, 1000]. After kernel 2.6.39, the value could be in the range [10, 1000].

device: The domain may have multiple device elements that further tune the weights for each host block device in use by the domain. Note that multiple guest disks can share a single host block device if they are backed by files within the same host file system, which is why this tuning parameter is at the global domain level rather than associated with each guest disk device (contrast this to the iotune element, which can apply to an individual disk). Each device element has two mandatory sub-elements: path, describing the absolute path of the device, and weight, giving the relative weight of that device, in the range [100, 1000]. After kernel 2.6.39, the value could be in the range [10, 1000]. Since 0.9.8. Additionally, the following optional sub-elements can be used:

• read_bytes_sec: Read throughput limit in bytes per second. Since 1.2.2.
• write_bytes_sec: Write throughput limit in bytes per second. Since 1.2.2.
• read_iops_sec: Read I/O operations per second limit. Since 1.2.2.
• write_iops_sec: Write I/O operations per second limit.

(write_iops_sec is available since 1.2.2.)

Hypervisors may allow for virtual machines to be placed into resource partitions, potentially with nesting of said partitions. The resource element groups together configuration related to resource partitioning. It currently supports a child element partition whose content defines the absolute path of the resource partition in which to place the domain. If no partition is listed, then the domain will be placed in a default partition. It is the responsibility of the app/admin to ensure that the partition exists prior to starting the guest. Only the (hypervisor specific) default partition can be assumed to exist by default. Resource partitions are currently supported by the QEMU and LXC drivers, which map partition paths to cgroups directories, in all mounted controllers. Since 1.0.5.

Requirements for CPU model, its features and topology can be specified using the following collection of elements. Example values: model core2duo; vendor Intel.

In case no restrictions need to be put on the CPU model and its features, a simpler cpu element can be used. Since 0.7.6.

cpu: The cpu element is the main container for describing guest CPU requirements. Its match attribute specifies how strictly the virtual CPU provided to the guest matches these requirements. Since 0.7.6 the match attribute can be omitted if topology is the only element within cpu. Possible values for the match attribute are:

• minimum: The specified CPU model and features describe the minimum requested CPU. A better CPU will be provided to the guest if it is possible with the requested hypervisor on the current host. This is a constrained host-model mode; the domain will not be created if the provided virtual CPU does not meet the requirements.
• exact: The virtual CPU provided to the guest should exactly match the specification. If such a CPU is not supported, libvirt will refuse to start the domain.
• strict: The domain will not be created unless the host CPU exactly matches the specification. This is not very useful in practice and should only be used if there is a real reason.

Since 0.8.5 the match attribute can be omitted and will default to exact.

Sometimes the hypervisor is not able to create a virtual CPU exactly matching the specification passed by libvirt. Since 3.2.0, an optional check attribute can be used to request a specific way of checking whether the virtual CPU matches the specification. It is usually safe to omit this attribute when starting a domain and stick with the default value. Once the domain starts, libvirt will automatically change the check attribute to the best supported value to ensure the virtual CPU does not change when the domain is migrated to another host. The following values can be used:

• none: Libvirt does no checking and it is up to the hypervisor to refuse to start the domain if it cannot provide the requested CPU. With QEMU this means no checking is done at all, since the default behavior of QEMU is to emit warnings but start the domain anyway.
• partial: Libvirt will check the guest CPU specification before starting a domain, but the rest is left to the hypervisor. It can still provide a different virtual CPU.
• full: The virtual CPU created by the hypervisor will be checked against the CPU specification and the domain will not be started unless the two CPUs match.

Since 0.9.10, an optional mode attribute may be used to make it easier to configure a guest CPU to be as close to the host CPU as possible.

Possible values for the mode attribute are:

custom: In this mode, the cpu element describes the CPU that should be presented to the guest. This is the default when no mode attribute is specified. This mode makes it so that a persistent guest will see the same hardware no matter what host the guest is booted on.

host-model: The host-model mode is essentially a shortcut to copying the host CPU definition from the capabilities XML into the domain XML. Since the CPU definition is copied just before starting a domain, exactly the same XML can be used on different hosts while still providing the best guest CPU each host supports. The match attribute can't be used in this mode. Specifying a CPU model is not supported either, but the model's fallback attribute may still be used. Using the feature element, specific flags may be enabled or disabled in addition to the host model. This may be used to fine tune features that can be emulated (since 1.1.1). Libvirt does not model every aspect of each CPU, so the guest CPU will not match the host CPU exactly. On the other hand, the ABI provided to the guest is reproducible. During migration, the complete CPU model definition is transferred to the destination host, so the migrated guest will see exactly the same CPU model even if the destination host contains more capable CPUs for the running instance of the guest; but shutting down and restarting the guest may present different hardware to the guest according to the capabilities of the new host.

Prior to libvirt 3.2.0 and QEMU 2.9.0, detection of the host CPU model via QEMU is not supported. Thus the CPU configuration created using host-model may not work as expected. Since 3.2.0 and QEMU 2.9.0 this mode works the way it was designed, and this is indicated by the fallback attribute set to forbid in the host-model CPU definition advertised in the domain capabilities XML. When the fallback attribute is set to allow in the domain capabilities XML, it is recommended to use custom mode with just the CPU model from the host capabilities XML. Since 1.2.11 PowerISA allows processors to run VMs in binary compatibility mode supporting an older version of the ISA. Libvirt on the PowerPC architecture uses host-model to signify a guest mode CPU running in binary compatibility mode. Example: when a user needs a power7 VM to run in compatibility mode on a Power8 host, this can be described in XML as a cpu element with mode host-model whose model element contains power7.

host-passthrough: With this mode, the CPU visible to the guest should be exactly the same as the host CPU, even in the aspects that libvirt does not understand. The downside of this mode is that the guest environment cannot be reproduced on different hardware. Thus, if you hit any bugs, you are on your own. Further details of that CPU can be changed using feature elements. Migration of a guest using host-passthrough is dangerous if the source and destination hosts are not identical in both hardware and configuration. If such a migration is attempted, the guest may hang or crash upon resuming execution on the destination host.

Both host-model and host-passthrough modes make sense when a domain can run directly on the host CPUs (for example, domains with type kvm).

The actual host CPU is irrelevant for domains with emulated virtual CPUs (such as domains with type qemu). However, for backward compatibility host-model may be implemented even for domains running on emulated CPUs, in which case the best CPU the hypervisor is able to emulate may be used rather than trying to mimic the host CPU model.

model: The content of the model element specifies the CPU model requested by the guest. The list of available CPU models and their definitions can be found in the cpu_map.xml file installed in libvirt's data directory. If a hypervisor is not able to use the exact CPU model, libvirt automatically falls back to the closest model supported by the hypervisor while maintaining the list of CPU features. Since 0.9.10, an optional fallback attribute can be used to forbid this behavior, in which case an attempt to start a domain requesting an unsupported CPU model will fail. Supported values for the fallback attribute are: allow (this is the default), and forbid. The optional vendor_id attribute (since 0.10.0) can be used to set the vendor id seen by the guest. It must be exactly 12 characters long. If not set, the vendor id of the host is used. Typical possible values are 'AuthenticAMD' and 'GenuineIntel'.
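The constraints on the model element (fallback limited to allow or forbid, vendor_id exactly 12 characters) are easy to check before handing XML to libvirt. The following Python sketch does so for a hypothetical cpu fragment; the function name check_model is an assumption, and the checks only cover what the text above states, not everything libvirt validates.

```python
import xml.etree.ElementTree as ET

# Hypothetical cpu fragment using the attributes described above.
CPU_XML = """
<cpu mode="custom" match="exact">
  <model fallback="forbid" vendor_id="GenuineIntel">core2duo</model>
</cpu>
"""


def check_model(xml_text):
    """Return (model, fallback, vendor_id) after validating the model attributes."""
    model = ET.fromstring(xml_text).find("model")
    if model is None:
        raise ValueError("no model element found")
    fallback = model.get("fallback", "allow")  # allow is the default
    if fallback not in ("allow", "forbid"):
        raise ValueError("fallback must be 'allow' or 'forbid'")
    vendor_id = model.get("vendor_id")
    if vendor_id is not None and len(vendor_id) != 12:
        raise ValueError("vendor_id must be exactly 12 characters long")
    return model.text, fallback, vendor_id


print(check_model(CPU_XML))  # ('core2duo', 'forbid', 'GenuineIntel')
```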

vendor: Since 0.8.3, the content of the vendor element specifies the CPU vendor requested by the guest. If this element is missing, the guest can be run on a CPU matching the given features regardless of its vendor. The list of supported vendors can be found in cpu_map.xml.

topology: The topology element specifies the requested topology of the virtual CPU provided to the guest.

Three non-zero values have to be given for sockets, cores, and threads: the total number of CPU sockets, the number of cores per socket, and the number of threads per core, respectively. Hypervisors may require that the maximum number of vCPUs specified by the cpus element equals the number of vCPUs resulting from the topology.

feature: The cpu element can contain zero or more feature elements used to fine-tune features provided by the selected CPU model. The list of known feature names can be found in the same file as CPU models. The meaning of each feature element depends on its policy attribute, which has to be set to one of the following values:

force: The virtual CPU will claim the feature is supported regardless of whether it is supported by the host CPU.

require: Guest creation will fail unless the feature is supported by the host CPU.

optional: The feature will be supported by the virtual CPU if and only if it is supported by the host CPU.

disable: The feature will not be supported by the virtual CPU.

forbid: Guest creation will fail if the feature is supported by the host CPU.

Since 0.8.5 the policy attribute can be omitted and will default to require.

cache: Since 3.3.0, the cache element describes the virtual CPU cache. If the element is missing, the hypervisor will use a sensible default.
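To make the topology and feature policy settings described above more concrete, here is a hedged sketch of a cpu element that combines them. The model, vendor, and feature names (core2duo, Intel, lahf_lm, vmx) are illustrative picks, and the name attribute on feature is how I understand a feature is identified; verify the names against cpu_map.xml on your system.

```xml
<cpu match='exact'>
  <model fallback='allow'>core2duo</model>
  <vendor>Intel</vendor>
  <!-- 1 socket x 2 cores per socket x 1 thread per core = 2 vCPUs -->
  <topology sockets='1' cores='2' threads='1'/>
  <!-- hide this feature from the guest even if the host CPU has it -->
  <feature policy='disable' name='lahf_lm'/>
  <!-- refuse to create the guest unless the host CPU supports this feature -->
  <feature policy='require' name='vmx'/>
</cpu>
```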

level: This optional attribute specifies which cache level is described by the element. A missing attribute means the element describes all CPU cache levels at once. Mixing cache elements with the level attribute set and those without the attribute is forbidden.

mode: The following values are supported:

emulate: The hypervisor will provide fake CPU cache data.

passthrough: The real CPU cache data reported by the host CPU will be passed through to the virtual CPU.

disable: The virtual CPU will report no CPU cache of the specified level (or no cache at all if the level attribute is missing).

Guest NUMA topology can be specified using the numa element (since 0.9.8). Each cell element specifies a NUMA cell or a NUMA node. cpus specifies the CPU or range of CPUs that are part of the node. memory specifies the node memory in kibibytes (i.e. blocks of 1024 bytes). Since 1.2.11 one can use an additional unit attribute to define the units in which memory is specified. Since 1.2.7 all cells should have an id attribute in case referring to some cell is necessary in the code; otherwise the cells are assigned ids in increasing order starting from 0. Mixing cells with and without the id attribute is not recommended as it may result in unwanted behaviour. Since 1.2.9 the optional attribute memAccess can control whether the memory is to be mapped as 'shared' or 'private'.

This is valid only for hugepages-backed memory and nvdimm modules. This guest NUMA specification is currently available only for QEMU/KVM and Xen.

A NUMA hardware architecture supports the notion of distances between NUMA cells. Since 3.10.0 it is possible to define the distance between NUMA cells using the distances element within a NUMA cell description. The sibling sub-element is used to specify the distance value between sibling NUMA cells. For more details, see the chapter explaining the system's SLIT (System Locality Information Table) within the ACPI (Advanced Configuration and Power Interface) specification.
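The cache and numa elements described above could be sketched roughly as follows. The CPU ranges, memory sizes, and distance values are made-up illustration values, and the id and value attributes on sibling reflect my understanding of the format rather than anything stated in the text.

```xml
<cpu>
  <!-- describe only the level 3 cache, with emulated cache data -->
  <cache level='3' mode='emulate'/>
  <numa>
    <!-- memory is in kibibytes unless the unit attribute says otherwise -->
    <cell id='0' cpus='0-3' memory='512000' unit='KiB'>
      <distances>
        <sibling id='0' value='10'/>
        <sibling id='1' value='21'/>
      </distances>
    </cell>
    <cell id='1' cpus='4-7' memory='512000' unit='KiB'>
      <distances>
        <sibling id='0' value='21'/>
        <sibling id='1' value='10'/>
      </distances>
    </cell>
  </numa>
</cpu>
```

Every cell carries an explicit id here, which avoids the discouraged mix of cells with and without the attribute.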

Describing distances between NUMA cells is currently only supported by Xen and QEMU. If no distances are given to describe the SLIT data between different cells, it will default to a scheme using 10 for local and 20 for remote distances.

It is sometimes necessary to override the default actions taken on various events. Not all hypervisors support all events and actions. The actions may be taken as a result of calls to libvirt APIs. Using virsh reboot or virsh shutdown would also trigger the event. For example, a configuration might set on_poweroff to destroy, on_reboot and on_crash to restart, and on_lockfailure to poweroff.

The following collections of elements allow the actions to be specified when a guest OS triggers a lifecycle operation. A common use case is to force a reboot to be treated as a poweroff when doing the initial OS installation.

This allows the VM to be re-configured for the first post-install bootup.

on_poweroff: The content of this element specifies the action to take when the guest requests a poweroff.

on_reboot: The content of this element specifies the action to take when the guest requests a reboot.

on_crash: The content of this element specifies the action to take when the guest crashes.

Each of these states allows for the same four possible actions:

destroy: The domain will be terminated completely and all resources released.

restart: The domain will be terminated and then restarted with the same configuration.

preserve: The domain will be terminated and its resources preserved to allow analysis.

rename-restart: The domain will be terminated and then restarted with a new name.

QEMU/KVM supports the on_poweroff and on_reboot events handling the destroy and restart actions. The preserve action for an on_reboot event is treated as a destroy, and the rename-restart action for an on_poweroff event is treated as a restart event.

The on_crash event supports these additional actions since 0.8.4:

coredump-destroy: The crashed domain's core will be dumped, and then the domain will be terminated completely and all resources released.

coredump-restart: The crashed domain's core will be dumped, and then the domain will be restarted with the same configuration.

Since 3.9.0, the lifecycle events can be configured via the API.

The on_lockfailure element (since 1.0.0) may be used to configure what action should be taken when a lock manager loses resource locks.
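As a sketch of the installation use case mentioned above, where a reboot is treated as a poweroff, the lifecycle elements might be set like this. The fragment is assumed to sit directly inside the domain element, and the choice of coredump-restart for crashes is only an illustration.

```xml
<!-- guest-requested poweroff: terminate and release all resources -->
<on_poweroff>destroy</on_poweroff>
<!-- during OS installation, treat a reboot like a poweroff -->
<on_reboot>destroy</on_reboot>
<!-- on a crash, dump the core and start the domain again -->
<on_crash>coredump-restart</on_crash>
```

After installation finishes, on_reboot would typically be changed back to restart for normal operation.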

The following actions are recognized by libvirt, although not all of them need to be supported by individual lock managers. When no action is specified, each lock manager will take its default action.

poweroff: The domain will be forcefully powered off.

restart: The domain will be powered off and started up again to reacquire its locks.

pause: The domain will be paused so that it can be manually resumed when lock issues are solved.

ignore: Keep the domain running as if nothing happened.

Since 0.10.2 it is possible to forcibly enable or disable BIOS advertisements to the guest OS (NB: only the qemu driver supports this).

pm: These elements enable ('yes') or disable ('no') BIOS support for S3 (suspend-to-mem) and S4 (suspend-to-disk) ACPI sleep states. If nothing is specified, then the hypervisor will be left with its default value.
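A hedged sketch of the lock failure and power management settings described above follows. The child element names suspend-to-mem and suspend-to-disk and the enabled attribute are how I understand the pm element is written; they do not appear in the text itself, so treat them as assumptions to verify.

```xml
<!-- pause the domain if the lock manager loses its resource locks -->
<on_lockfailure>pause</on_lockfailure>
<pm>
  <!-- advertise S3 (suspend-to-mem) to the guest, but not S4 (suspend-to-disk) -->
  <suspend-to-mem enabled='yes'/>
  <suspend-to-disk enabled='no'/>
</pm>
```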

Note: This setting cannot prevent the guest OS from performing a suspend, as the guest OS itself can choose to circumvent the unavailability of the sleep states (e.g. S4 by turning off completely).

Hypervisors may allow certain CPU / machine features to be toggled on/off. All features are listed within the features element; omitting a togglable feature tag turns it off. The available features can be found by asking for the capabilities XML and the domain capabilities XML, but a common set for fully virtualized domains is:

pae: Physical address extension mode allows 32-bit guests to address more than 4 GB of memory.

acpi: ACPI is useful for power management; for example, with KVM guests it is required for graceful shutdown to work.

apic: APIC allows the use of programmable IRQ management. Since 0.10.2 (QEMU only) there is an optional attribute eoi with values on and off which toggles the availability of EOI (End of Interrupt) for the guest.

hap: Depending on the state attribute (values on, off), enable or disable the use of Hardware Assisted Paging. The default is on if the hypervisor detects availability of Hardware Assisted Paging.

viridian: Enable Viridian hypervisor extensions for paravirtualizing guest operating systems.

privnet: Always create a private network namespace. This is automatically set if any interface devices are defined. This feature is only relevant for container-based virtualization drivers, such as LXC.

hyperv: Enable various features improving behavior of guests running Microsoft Windows.
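For the simpler toggles listed so far, a features block for a QEMU/KVM guest might look roughly like the sketch below. It deliberately leaves out hap, viridian, privnet, and hyperv, since which of those apply depends on the hypervisor driver in use.

```xml
<features>
  <!-- let a 32-bit guest address more than 4 GB of memory -->
  <pae/>
  <!-- needed for graceful shutdown of KVM guests -->
  <acpi/>
  <!-- programmable IRQ management, with EOI made available to the guest -->
  <apic eoi='on'/>
</features>
```

Any togglable feature whose tag is omitted from the block is turned off.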